Manufacturing excellence depends on data-driven decision-making, but how can you trust your decisions if you can’t trust your data? Data quality monitoring serves as the foundation for maintaining reliable, accurate, and actionable manufacturing information.

Whether you’re overseeing pharmaceutical production lines, managing medical device assembly, or running any regulated manufacturing operation, you’re likely dealing with an overwhelming amount of data.

Manufacturing professionals like you are facing increasing pressure to maintain perfect records while accelerating production. A single data error in your electronic batch records could trigger a lengthy investigation, halt production, or worse, lead to regulatory findings.

This guide will help you implement robust data quality monitoring that protects your operation without slowing it down.

1. Understanding Data Quality Monitoring in Manufacturing

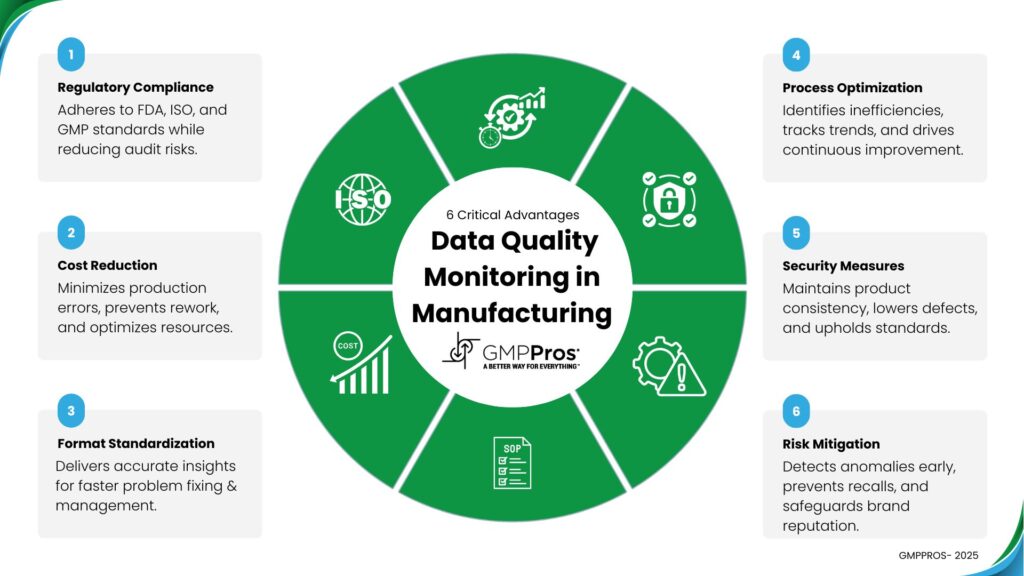

For regulated manufacturing professionals, data quality monitoring extends well beyond simple error checking. It’s not just about identifying incorrect entries; it’s about ensuring the integrity of your entire quality system. Every temperature log, every batch record, and every calibration document contributes to your compliance narrative.

1. What is Data Quality Monitoring?

Data quality monitoring represents the systematic process of evaluating, measuring, and tracking the reliability and effectiveness of your data systems. In manufacturing, this encompasses everything from batch records and quality control measurements to equipment readings and compliance documentation.

Consider this example: A pharmaceutical manufacturer produces critical medications where precise measurements are essential. Their batch manufacturing record system collects thousands of data points per batch – from temperature readings to ingredient quantities.

Without proper monitoring, how would they know if sensors are drifting out of calibration or if data entry errors are sneaking in?

2. The True Cost of Poor Data Quality

In regulated manufacturing, the financial impact of poor data quality extends far beyond simple rework costs. Based on our work with hundreds of manufacturing facilities and on industry research, the typical impacts look like this:

| Impact Area | Potential Cost |
| --- | --- |
| Production Delays | 5-10% of runtime lost |
| Quality Issues | 2-5% increase in defect rates |
| Compliance Risk | Up to $1M per major finding |
| Decision Delays | 20-30% longer analysis time |
| Rework Requirements | 3-7% of total production |

Data Quality Impact Comparison Across Manufacturing Sectors

| Manufacturing Sector | Avg. Data Quality Error Rate | Estimated Annual Cost of Poor Data | Primary Risk Areas |
| --- | --- | --- | --- |
| Pharmaceutical | 3-5% | $2.5M – $4.5M | Regulatory compliance, batch record integrity |
| Medical Devices | 2-4% | $1.8M – $3.2M | Product traceability, quality control |
| Food & Beverage | 4-6% | $1.5M – $2.7M | Safety documentation, ingredient tracking |
| Aerospace | 1-3% | $3M – $5M | Critical component documentation, safety records |

2. Essential Data Quality Metrics

Quality managers and validation specialists in regulated manufacturing environments need specific, measurable indicators to track data integrity. While general manufacturing might focus on basic error rates, regulated industries require a more nuanced approach that satisfies both operational excellence and compliance requirements. Let’s examine the metrics that matter most in GMP environments.

1. Accuracy – How correct the data is.

- Check for differences from known standards.

- Track errors in automated data collection.

- Monitor mistakes in manual data entry.

- Measure how much calibration shifts over time.
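As a rough illustration of the accuracy checks above, the sketch below compares repeated readings of a reference standard against its known value and estimates how quickly the error is drifting between calibration checks. The function names, the tolerance, and the sample numbers are assumptions made for this example, not part of any specific system.

```python
from statistics import mean


def accuracy_report(readings, reference, tolerance):
    """Summarize how far readings of a reference standard sit from its known value."""
    errors = [r - reference for r in readings]
    out_of_tolerance = [e for e in errors if abs(e) > tolerance]
    return {
        "mean_error": mean(errors),                      # systematic bias
        "max_abs_error": max(abs(e) for e in errors),    # worst single reading
        "out_of_tolerance_rate": len(out_of_tolerance) / len(readings),
    }


def drift_per_check(errors_over_time):
    """Least-squares slope of error against check index (0, 1, 2, ...).

    A slope that stays clearly away from zero suggests calibration drift.
    Needs at least two checks.
    """
    n = len(errors_over_time)
    x_mean = (n - 1) / 2
    y_mean = mean(errors_over_time)
    num = sum((i - x_mean) * (e - y_mean) for i, e in enumerate(errors_over_time))
    den = sum((i - x_mean) ** 2 for i in range(n))
    return num / den


# Hypothetical data: four readings of a 100.0 unit reference standard
report = accuracy_report([100.0, 100.5, 99.5, 101.5], reference=100.0, tolerance=1.0)
```

In practice the tolerance would come from your calibration procedure, and the drift estimate would feed the decision about when to recalibrate.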

2. Completeness – How much of the needed data is available.

- Count missing data points.

- Ensure all required fields are filled.

- Identify gaps in collected data.

- Review if all necessary documentation is present.
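A completeness score can be as simple as the share of required fields that are actually filled. The sketch below assumes records arrive as dictionaries and that the required field names are the hypothetical ones listed in `REQUIRED_FIELDS`; adapt both to your own batch record layout.

```python
# Hypothetical required fields for one batch record entry
REQUIRED_FIELDS = ("batch_id", "operator", "temperature_c", "timestamp")


def completeness(records, required=REQUIRED_FIELDS):
    """Return (score, missing), where score is the fraction of required fields
    that are filled and missing lists (record index, field name) for each gap."""
    total = len(records) * len(required)
    missing = [
        (i, field)
        for i, rec in enumerate(records)
        for field in required
        if rec.get(field) in (None, "")   # absent, null, or empty string
    ]
    score = 1.0 if total == 0 else 1 - len(missing) / total
    return score, missing


records = [
    {"batch_id": "B1", "operator": "A. Smith", "temperature_c": 21.5,
     "timestamp": "2024-01-01T08:00:00"},
    {"batch_id": "B2", "operator": "", "temperature_c": None,
     "timestamp": "2024-01-01T09:00:00"},
]
score, missing = completeness(records)
```

Returning the list of gaps alongside the score keeps the result actionable: the score goes on a report, the gaps go to whoever has to fix them.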

3. Consistency – How uniform the data is across systems and time.

- Compare data across different systems.

- Check if data remains stable over time.

- Ensure measurements use the same units.

- Verify data follows a standard format.
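Consistency checks usually start by converting values to a common unit and then comparing the same record across systems. The sketch below assumes two hypothetical exports (for example, one from an MES and one from a LIMS) keyed by batch ID, each holding a temperature value with its unit. The 0.5 °C tolerance is an illustrative assumption.

```python
def to_celsius(value, unit):
    """Normalize a temperature to degrees Celsius."""
    if unit == "C":
        return value
    if unit == "F":
        return (value - 32) * 5 / 9
    if unit == "K":
        return value - 273.15
    raise ValueError(f"unknown temperature unit: {unit!r}")


def cross_system_mismatches(system_a, system_b, tolerance_c=0.5):
    """Compare batches present in both systems after unit normalization.

    Each argument maps batch_id -> (value, unit). Returns a list of
    (batch_id, value_a_in_c, value_b_in_c) for pairs that disagree.
    """
    mismatches = []
    for batch_id in sorted(system_a.keys() & system_b.keys()):
        a = to_celsius(*system_a[batch_id])
        b = to_celsius(*system_b[batch_id])
        if abs(a - b) > tolerance_c:
            mismatches.append((batch_id, round(a, 2), round(b, 2)))
    return mismatches


# Hypothetical exports from two systems
mes = {"B1": (25.0, "C"), "B2": (30.0, "C")}
lims = {"B1": (77.0, "F"), "B2": (90.0, "F")}
mismatches = cross_system_mismatches(mes, lims)
```

Batches that appear in only one system are ignored here; in a real check they would more likely be reported as a completeness gap.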

4. Real-World Example: Batch Record Monitoring

A medical device manufacturer implemented comprehensive data quality monitoring for their batch manufacturing records. Their monitoring framework looked like this:

Monitoring Framework Example:

1. Real-time validation during data entry

– Format checking

– Range validation

– Cross-reference verification

2. Automated daily checks

– Completeness audit

– Consistency verification

– Trend analysis

3. Weekly quality reports

– Error rate tracking

– Correction frequency

– System performance metrics
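To make the first tier of that framework concrete, here is a minimal sketch of format, range, and cross-reference checks run at data entry. It is not the manufacturer's actual implementation. The batch ID pattern, the temperature limits, and the equipment list are all hypothetical placeholders.

```python
import re

BATCH_ID_PATTERN = re.compile(r"B-\d{6}")      # hypothetical ID format, e.g. B-000123
TEMPERATURE_RANGE_C = (2.0, 8.0)               # hypothetical storage limits
KNOWN_EQUIPMENT = {"EQ-101", "EQ-102"}         # hypothetical equipment master list


def validate_entry(entry):
    """Return a list of validation errors for one entry (empty list means it passed)."""
    errors = []

    # Format check: the batch ID must match the expected pattern exactly
    if not BATCH_ID_PATTERN.fullmatch(str(entry.get("batch_id", ""))):
        errors.append("format: batch_id")

    # Range check: the temperature must be numeric and inside the allowed window
    temp = entry.get("temperature_c")
    low, high = TEMPERATURE_RANGE_C
    if not isinstance(temp, (int, float)) or not low <= temp <= high:
        errors.append("range: temperature_c")

    # Cross-reference check: the equipment must exist in the master list
    if entry.get("equipment_id") not in KNOWN_EQUIPMENT:
        errors.append("cross-reference: equipment_id")

    return errors


errors = validate_entry(
    {"batch_id": "B-000123", "temperature_c": 9.4, "equipment_id": "EQ-101"}
)
```

Collecting every error rather than stopping at the first one lets the operator correct the whole entry in a single pass.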

3. Data Quality Monitoring Techniques That Work

For process engineers and quality professionals working in regulated manufacturing, theoretical approaches aren’t enough. You need proven techniques that have survived regulatory scrutiny and delivered consistent results.

These methods have been battle-tested in FDA-regulated facilities and refined through real-world implementation.

1. Profile-Based Monitoring

Technology transfer specialists and validation engineers understand that each product and process has its unique fingerprint.

Creating detailed data profiles isn’t just about setting limits – it’s about understanding the natural variation in your regulated processes while maintaining strict compliance requirements.

This technique involves creating detailed profiles of what “good” data looks like. For batch manufacturing records, this includes:

- Expected value ranges

- Typical relationships between variables

- Normal process variation limits

- Standard timing patterns

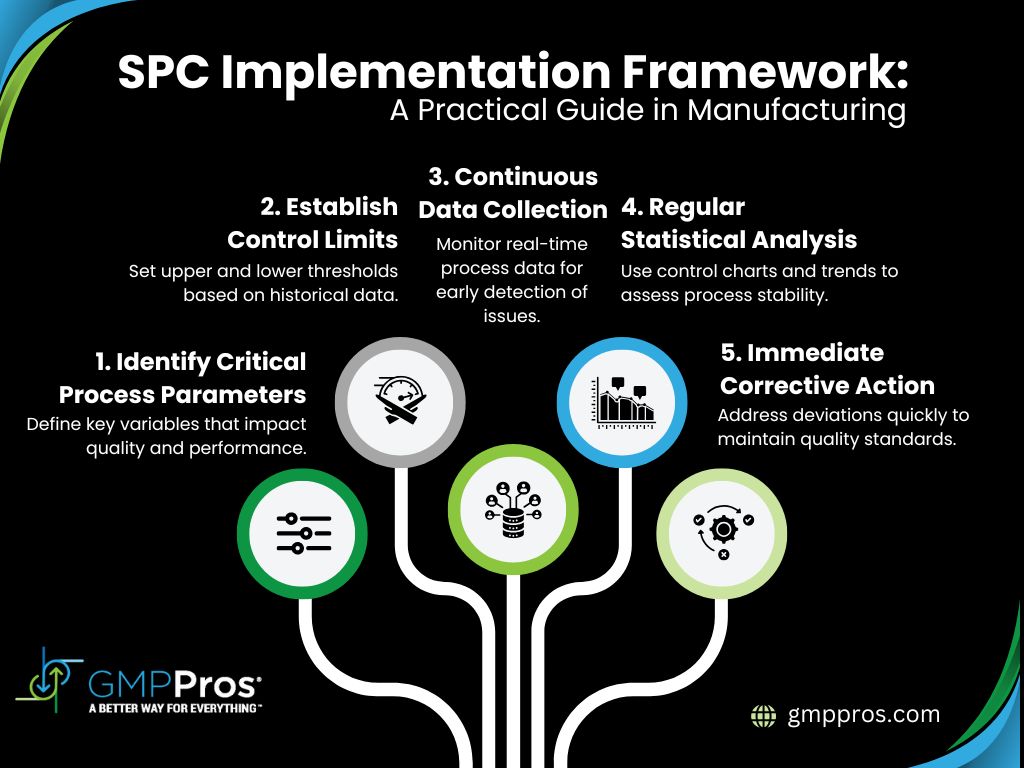
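One simple way to turn this idea into a check is to derive expected ranges from known-good batches and flag any new batch that falls outside them. The sketch below covers only the first item, expected value ranges, and assumes a mean plus or minus `k` standard deviations rule. The parameter names and the value of `k` are illustrative assumptions; a validated process would normally take its limits from the process specification.

```python
from statistics import mean, stdev


def build_profile(baseline_batches, k=3.0):
    """Derive (low, high) limits per parameter from known-good batches.

    baseline_batches is a list of dicts mapping parameter name -> value.
    Needs at least two batches so a standard deviation can be computed.
    """
    profile = {}
    for name in baseline_batches[0]:
        values = [batch[name] for batch in baseline_batches]
        m, s = mean(values), stdev(values)
        profile[name] = (m - k * s, m + k * s)
    return profile


def check_against_profile(batch, profile):
    """Return the parameters of a new batch that fall outside the profile."""
    return {
        name: value
        for name, value in batch.items()
        if name in profile and not profile[name][0] <= value <= profile[name][1]
    }


# Hypothetical baseline from four good batches
baseline = [
    {"temp_c": 20.0, "ph": 7.0},
    {"temp_c": 21.0, "ph": 7.1},
    {"temp_c": 19.0, "ph": 6.9},
    {"temp_c": 20.0, "ph": 7.0},
]
profile = build_profile(baseline)
deviations = check_against_profile({"temp_c": 25.0, "ph": 7.05}, profile)
```

Relationships between variables and timing patterns need richer profiles than per-parameter ranges, so treat this as a starting point only.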

2. Statistical Process Control Integration

Statistical Process Control (SPC) goes beyond basic tracking; it’s a critical tool for maintaining product quality and demonstrating regulatory compliance. SPC helps manufacturers identify process variations, predict potential quality issues, and provide data-driven evidence of consistent manufacturing performance.

Key SPC Monitoring Techniques:

- Control Charts: Real-time visualization of process performance

- Process Capability Indices (Cp/Cpk): Quantitative measure of how well a stable process fits within its specification limits

- Trend Analysis: Early detection of systematic shifts

- Variance Monitoring: Understanding process variability

By integrating robust SPC techniques, manufacturers can:

- Reduce product variability

- Minimize quality-related risks

- Provide auditable quality evidence

- Improve overall process efficiency
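The control chart and capability calculations can be sketched in a few lines. The example below assumes an individuals chart, where sigma is estimated from the average moving range divided by the constant d2 = 1.128, and it reuses the same sigma estimate for Cp and Cpk. The specification limits and sample values are hypothetical.

```python
from statistics import mean

D2_N2 = 1.128  # control chart constant d2 for moving ranges of two consecutive points


def sigma_from_moving_range(values):
    """Estimate short-term sigma from the average moving range."""
    moving_ranges = [abs(b - a) for a, b in zip(values, values[1:])]
    return mean(moving_ranges) / D2_N2


def individuals_limits(values):
    """Return (lower control limit, center line, upper control limit)."""
    center = mean(values)
    sigma = sigma_from_moving_range(values)
    return center - 3 * sigma, center, center + 3 * sigma


def capability(values, lsl, usl):
    """Return (Cp, Cpk) against lower and upper specification limits."""
    mu = mean(values)
    sigma = sigma_from_moving_range(values)
    cp = (usl - lsl) / (6 * sigma)
    cpk = min(usl - mu, mu - lsl) / (3 * sigma)
    return cp, cpk


def out_of_control(values, lcl, ucl):
    """Indices of points that fall outside the control limits."""
    return [i for i, v in enumerate(values) if v < lcl or v > ucl]


# Hypothetical baseline measurements and specification limits
baseline = [10.0, 10.2, 9.8, 10.1, 9.9]
lcl, center, ucl = individuals_limits(baseline)
cp, cpk = capability(baseline, lsl=9.0, usl=11.0)
flagged = out_of_control([10.0, 11.5, 9.9], lcl, ucl)
```

Control limits come from the process itself, while specification limits come from the product requirements; keeping the two separate in code mirrors how they should be kept separate in review.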

3. Advanced Detection Methods

Contemporary data quality monitoring incorporates sophisticated detection approaches:

Machine Learning-Based Detection

- Pattern recognition

- Anomaly identification

- Predictive error detection

- Automated classification

Rule-Based Validation

- Business logic enforcement

- Regulatory compliance checks

- Standard operating procedure validation

- Cross-reference verification
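Anomaly identification does not have to start with a trained model. As a deliberately simple statistical stand-in (not machine learning), the sketch below flags readings using a modified z-score built on the median and the median absolute deviation, which is less sensitive to the outliers it is trying to find than a mean-based score. The 3.5 threshold is a commonly used default, and the readings are hypothetical.

```python
from statistics import median


def mad_anomalies(values, threshold=3.5):
    """Return indices of values whose modified z-score exceeds the threshold.

    Modified z-score = 0.6745 * |x - median| / MAD, where MAD is the
    median absolute deviation from the median.
    """
    med = median(values)
    mad = median([abs(v - med) for v in values])
    if mad == 0:
        return []  # no spread around the median, so this score is undefined
    return [
        i for i, v in enumerate(values)
        if 0.6745 * abs(v - med) / mad > threshold
    ]


# Hypothetical temperature readings with one suspicious spike
readings = [20.1, 20.3, 19.9, 20.0, 20.2, 35.0, 20.1]
suspects = mad_anomalies(readings)
```

A flagged point is a prompt for investigation, not a verdict: a spike may be a sensor fault, a data entry error, or a genuine process excursion.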

4. Comparative Analysis of Data Quality Monitoring Techniques

| Technique | Best For | Strengths | Limitations | Regulatory Alignment |
| --- | --- | --- | --- | --- |
| Profile-Based Monitoring | Consistent, predictable processes | Detailed understanding of normal variations | Requires extensive baseline data | High (Provides clear documentation) |
| Statistical Process Control | Variability-sensitive processes | Quantitative process performance | Complex implementation | Excellent (Preferred by FDA) |
| Machine Learning Detection | Complex, data-rich environments | Predictive error identification | High initial setup cost | Emerging (Growing regulatory acceptance) |
| Rule-Based Validation | Regulated manufacturing | Strict compliance checking | Less adaptable to process changes | Very High (Direct regulatory compliance) |

4. Implementing Your Monitoring System

Implementation in regulated environments requires a careful balance between thoroughness and efficiency. Your monitoring system must satisfy regulatory requirements without creating unnecessary complexity or slowing down operations.

Here’s how successful manufacturers are achieving this balance:

Step 1: Assessment and Planning

Start with a comprehensive evaluation:

| Assessment Area | Key Questions |
| --- | --- |
| Current State | What systems generate data? |
| Pain Points | Where do errors typically occur? |
| Resources | What tools and staff are available? |
| Priorities | Which manufacturing processes are most critical? |

Step 2: Framework Development

Create a structured approach:

- Define quality standards

- Establish monitoring procedures

- Set up alerting systems

- Create response protocols

- Document all processes

Step 3: Tool Selection

Choose appropriate tools based on your needs:

- Automated validation systems

- Statistical analysis software

- Quality management platforms

- Integration middleware

- Reporting systems

5. Best Practices for Ongoing Monitoring

Maintaining data quality in GMP environments isn’t a one-time effort. Successful manufacturers have developed systematic approaches that satisfy regulatory requirements while remaining operationally practical. These best practices have been proven effective across various regulated industries.

- Regular System Audits
  - Monthly data quality reviews
  - Quarterly system assessments
  - Annual comprehensive audits
- Team Training and Engagement
  - Regular training sessions
  - Clear responsibility assignment
  - Performance feedback loops
  - Continuous improvement initiatives
- Documentation and Tracking
  - Detailed monitoring logs
  - Issue resolution records
  - Change management documentation
  - Performance metrics tracking

6. Future-Proofing Your Data Quality

As regulatory requirements evolve and technology advances, your data quality monitoring must keep pace. Forward-thinking manufacturers are already preparing for upcoming changes in data integrity requirements and technological capabilities.

Here’s what you need to consider for long-term compliance and efficiency:

1. Emerging Technologies

The regulatory landscape is rapidly evolving to embrace new technologies while maintaining strict compliance requirements. Understanding how to properly implement these advances within GMP constraints is crucial for maintaining competitive advantage.

- AI-powered quality checks

- Real-time monitoring systems

- Predictive analytics integration

- Blockchain for data integrity
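The core idea behind using blockchain for data integrity is hash chaining: each record stores a hash of the record before it, so any later edit breaks the chain. The sketch below shows only that idea with Python's standard `hashlib` and `json` modules. It is not a blockchain (there is no distribution or consensus), and the record fields are hypothetical.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(payload, prev_hash):
    """SHA-256 over a canonical JSON form of the payload and the previous hash."""
    body = json.dumps({"payload": payload, "prev_hash": prev_hash}, sort_keys=True)
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


def append_entry(chain, payload):
    """Append a record that is linked to the previous record by its hash."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS_HASH
    entry = {
        "payload": payload,
        "prev_hash": prev_hash,
        "hash": _digest(payload, prev_hash),
    }
    chain.append(entry)
    return entry


def verify_chain(chain):
    """Recompute every hash; return False if any record or link was altered."""
    prev_hash = GENESIS_HASH
    for entry in chain:
        if entry["prev_hash"] != prev_hash:
            return False
        if _digest(entry["payload"], prev_hash) != entry["hash"]:
            return False
        prev_hash = entry["hash"]
    return True


log = []
append_entry(log, {"batch_id": "B1", "temperature_c": 5.1})
append_entry(log, {"batch_id": "B1", "temperature_c": 5.3})
intact = verify_chain(log)
```

Someone who can rewrite the whole log can still rebuild every hash, which is why production designs also anchor the chain somewhere outside the writer's control.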

2. Integration Opportunities

In modern regulated manufacturing, isolated systems create compliance risks. Strategic integration of your data quality monitoring with existing systems isn’t just about efficiency – it’s about creating a robust, audit-ready quality system.

- Connect with MES (manufacturing execution systems)

- Link to ERP platforms

- Integrate with LIMS

- Couple with automation systems

3. Technology Integration Readiness Checklist

| Integration Area | Current Capability | Future Readiness | Recommended Action | Priority Level |
| --- | --- | --- | --- | --- |
| AI-Powered Quality Checks | Limited | Low to Moderate | Invest in training and pilot programs | High |
| Real-Time Monitoring Systems | Partial | Moderate | Upgrade existing infrastructure | Medium |
| Predictive Analytics | Minimal | Low | Develop data science capabilities | High |
| Blockchain Data Integrity | Not Implemented | Very Low | Explore pilot projects | Low to Medium |

7. Taking Action

Every day you operate without proper data quality monitoring is a compliance risk. Whether you’re facing upcoming audits or simply want to improve operational efficiency, the time to act is now. Here’s your practical roadmap to implementation.

Implementing effective data quality monitoring doesn’t happen overnight, but you can start with these steps:

- Assess your current data quality state

- Identify critical monitoring points

- Select appropriate tools and techniques

- Train your team on new processes

- Implement monitoring gradually

- Measure and adjust as needed

Remember, quality data drives quality decisions. By implementing robust data quality monitoring, you’re not just protecting your data – you’re protecting your entire operation’s integrity and effectiveness.

Conclusion

In regulated manufacturing, data quality is critical – not merely a compliance checkbox, but a fundamental driver of product safety and operational excellence. The metrics and methodologies discussed provide a strategic framework for proactive data quality monitoring.

This approach enables early detection of potential issues, mitigates risks of costly production errors, and maintains regulatory compliance.

Effective data quality management serves as a strategic safeguard, protecting organizational reputation and operational integrity. The potential consequences of inadequate monitoring are significant: regulatory penalties, production disruptions, and substantial financial and reputational damage.

GMP Pros offers targeted, professional data quality solutions:

- Comprehensive System Assessment: Rigorous diagnostic analysis to identify systemic vulnerabilities in your current data management infrastructure

- Tailored Monitoring Solutions: Custom-engineered monitoring frameworks aligned with your specific operational requirements

- Technical Skills Transfer: Specialized training programs to enhance your team’s data quality management capabilities

Our engineering expertise addresses the complex challenges inherent in regulated manufacturing environments. We provide strategic, technically robust solutions that transform data quality from a potential liability into a competitive operational advantage. Interested in elevating your data quality management? Contact GMP Pros for a professional consultation focused on your specific organizational needs.