The pharmaceutical industry faces constant scrutiny regarding data integrity in clinical trials. When pharmaceutical companies develop new drugs or medical devices, they must navigate the web of pharma compliance requirements that directly impact patient safety, product efficacy, and ultimately, market authorization.

Despite its critical importance, maintaining data integrity throughout clinical trials remains a persistent challenge for even the most experienced organizations.

Recent FDA warning letters reveal that data integrity violations continue to plague the industry. In 2023 alone, approximately 40% of FDA citations involved some form of data integrity issue.

These violations ranged from incomplete audit trails to questionable handling of out-of-specification results. The consequences were delayed approvals, costly remediation programs, and damaged corporate reputations.

Core Data Integrity Principles in Clinical Trials

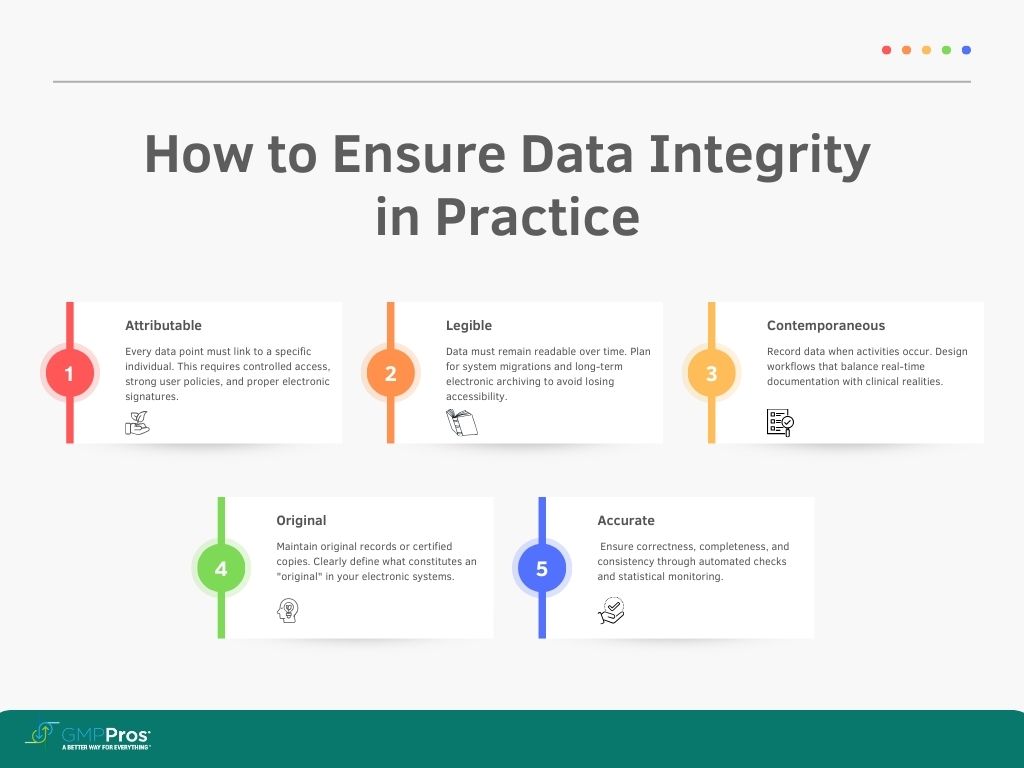

Most pharma professionals recognize the ALCOA+ framework, but applying these principles consistently across complex multinational trials often proves challenging. Let’s examine these principles from a practical implementation perspective:

Attributable

Each data point must connect to a specific individual. This sounds straightforward until you consider the complexities of modern clinical trials involving dozens of sites, hundreds of patients, and multiple electronic systems.

Practical attribution requires thoughtful user management policies, controlled system access, and meaningful electronic signatures.

Legible

Data must remain readable throughout its lifecycle. With electronic systems largely replacing paper records, legibility concerns have shifted toward data migration, system transitions, and long-term electronic archiving.

Many companies discover too late that older electronic records become effectively illegible when systems change without proper data migration planning.

Contemporaneous

Recording data when activities occur prevents memory-based errors and potential fabrication. However, immediate documentation isn’t always practical in busy clinical settings.

Well-designed workflows must balance contemporaneous documentation with clinical realities, providing realistic timeframes and clear expectations for timely entry.
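One way to make "timely entry" measurable is to compare when an activity happened with when it was recorded. The sketch below is illustrative only: the 24-hour window and the function name `is_late_entry` are assumptions, and a real limit would come from the protocol or SOP.

```python
from datetime import datetime, timedelta

# Hypothetical entry window; a real limit would be defined in the protocol or SOP.
MAX_ENTRY_DELAY = timedelta(hours=24)

def is_late_entry(performed_at: datetime, recorded_at: datetime,
                  max_delay: timedelta = MAX_ENTRY_DELAY) -> bool:
    """Return True when the record was entered after the allowed window."""
    if recorded_at < performed_at:
        # An entry dated before the activity usually points to a clock or time zone problem.
        raise ValueError("recorded_at precedes performed_at")
    return recorded_at - performed_at > max_delay

visit = datetime(2024, 3, 1, 9, 0)
print(is_late_entry(visit, datetime(2024, 3, 1, 15, 30)))  # False: entered 6.5 hours later
print(is_late_entry(visit, datetime(2024, 3, 3, 9, 0)))    # True: entered 48 hours later
```

A late entry is not automatically a violation. Flagging it simply prompts the site to document the reason for the delay.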

Original

Maintaining original records (or certified copies) preserves data integrity. Yet determining what constitutes an “original” in complex electronic landscapes presents ongoing challenges.

- Is it the electronic case report form (eCRF)?

- The source document?

- Both?

Clear policies must define originals within your specific technology ecosystem.

Accurate

Beyond simple correctness, accuracy encompasses completeness and consistency. Ensuring accuracy requires multiple layers of verification, from automated range checks to statistical monitoring for anomalous patterns that might indicate data problems.
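An automated range check, the first of those verification layers, can be as simple as the sketch below. The field names and plausibility limits are hypothetical placeholders. Real limits would come from the protocol and data management plan.

```python
# Hypothetical plausibility limits, for illustration only.
RANGE_CHECKS = {
    "systolic_bp_mmhg": (60, 260),
    "heart_rate_bpm": (30, 220),
    "body_temp_c": (33.0, 42.0),
}

def range_check(record: dict) -> list[str]:
    """Return query messages for missing or out-of-range fields."""
    queries = []
    for field, (low, high) in RANGE_CHECKS.items():
        value = record.get(field)
        if value is None:
            queries.append(f"{field}: missing")
        elif not low <= value <= high:
            queries.append(f"{field}: {value} outside [{low}, {high}]")
    return queries

record = {"systolic_bp_mmhg": 1200, "heart_rate_bpm": 72}
print(range_check(record))
# ['systolic_bp_mmhg: 1200 outside [60, 260]', 'body_temp_c: missing']
```

A check like this catches keying errors and gaps at entry time. It does not replace statistical monitoring, which looks for patterns across many records.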

The “+” in ALCOA+ adds crucial dimensions that regulators increasingly emphasize:

Complete

Partially documented trials raise immediate red flags for regulators. Missing data points, unexplained gaps in documentation, and incomplete audit trails suggest potential manipulation. Robust monitoring practices must verify completeness throughout the trial lifecycle.

Consistent

Data should follow expected patterns and relationships. Deviations require investigation and explanation. Modern data analytics tools can identify inconsistencies that might escape human reviewers, flagging potential issues for further examination.

Enduring

Data must survive throughout required retention periods, which often extend decades beyond study completion. Electronic systems obsolescence, company mergers, and organizational changes all threaten data durability without proper planning.

Available

Regulators expect prompt access to trial data upon request. This requires not just physical storage but organized systems that enable efficient retrieval, especially during high-pressure inspection situations.

Regulatory Framework for Data Integrity

While regulatory authorities worldwide emphasize similar principles, their specific requirements and enforcement approaches vary significantly.

FDA regulations center on 21 CFR Part 11 for electronic records, alongside predicate rules governing good clinical practice. The FDA increasingly focuses on data governance systems rather than mere technical compliance, as reflected in its 2018 Data Integrity Guidance.

Recent enforcement actions demonstrate the agency’s expectation that companies implement holistic approaches to data integrity rather than checkbox-style compliance.

Meanwhile, the European Medicines Agency (EMA) approaches data integrity through GCP inspections and its 2020 guidance document.

European regulators typically emphasize risk-based approaches and quality management systems over prescriptive technical requirements. This difference in emphasis creates challenges for global trials seeking to satisfy multiple authorities simultaneously.

The International Council for Harmonisation (ICH) GCP E6(R2) guideline attempts to bridge these regional differences through its quality risk management approach. Its risk-based monitoring provisions acknowledge limited resources while emphasizing protection of critical data. The forthcoming E6(R3) revision promises even more flexibility for innovative trial designs while maintaining core data integrity expectations.

Companies operating globally must design compliance programs that satisfy the most stringent requirements from all relevant jurisdictions, essentially implementing a “highest common denominator” approach. This often results in more conservative practices than might be strictly necessary for any single market.

Common Data Integrity Challenges in Clinical Trials

The transition to decentralized trials has introduced new data integrity vulnerabilities. When patients collect their own data or participate remotely, traditional oversight mechanisms break down. Companies struggle to verify that data collection occurs according to protocol when direct observation isn’t possible.

Some organizations have successfully addressed this challenge through real-time video monitoring, enhanced ePRO (electronic patient-reported outcome) validation, and wearable devices with tamper-evident features. However, these solutions introduce their own compliance challenges regarding privacy, data transmission, and system validation.

System interoperability presents another persistent headache. The average clinical trial employs four to seven major electronic systems, from CTMS (Clinical Trial Management System) to EDC (Electronic Data Capture) to IVRS (Interactive Voice Response System).

Data must flow seamlessly between these systems without corruption or unauthorized modification. Interface validation, reconciliation procedures, and data transfer verification become critical control points.
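A reconciliation step between two systems can be sketched as a record-by-record digest comparison. This is a minimal illustration, assuming both systems can export records as dictionaries keyed by a shared record ID. The IDs, field names, and the `reconcile` helper are hypothetical.

```python
import hashlib
import json

def record_digest(record: dict) -> str:
    """SHA-256 of a record serialized with sorted keys, so key order does not matter."""
    canonical = json.dumps(record, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()

def reconcile(source: dict[str, dict], target: dict[str, dict]) -> dict[str, list[str]]:
    """Compare two exports keyed by record ID and report the differences."""
    return {
        "missing_in_target": sorted(source.keys() - target.keys()),
        "unexpected_in_target": sorted(target.keys() - source.keys()),
        "content_mismatch": sorted(
            rid for rid in source.keys() & target.keys()
            if record_digest(source[rid]) != record_digest(target[rid])
        ),
    }

edc = {"S01-001": {"alt_u_l": 32}, "S01-002": {"alt_u_l": 41}, "S01-003": {"alt_u_l": 28}}
warehouse = {"S01-001": {"alt_u_l": 32}, "S01-002": {"alt_u_l": 14}}
print(reconcile(edc, warehouse))
# {'missing_in_target': ['S01-003'], 'unexpected_in_target': [], 'content_mismatch': ['S01-002']}
```

Each non-empty list becomes a discrepancy to investigate and document before the transfer is accepted.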

Third-party vendor management creates additional complications. Most sponsors outsource significant portions of their trials to CROs, central labs, and technology providers. When data integrity issues arise at vendor organizations, sponsors remain ultimately responsible.

Robust qualification processes, ongoing oversight programs, and clear quality agreements help mitigate these risks, but many companies still struggle with effective vendor governance.

How the Pandemic Altered Pharma Compliance

COVID-19 forced an unprecedented experiment in clinical trial flexibility. Practically overnight, traditional site-based monitoring became impossible. Protocol deviations skyrocketed as patients couldn’t attend scheduled visits. Supply chain disruptions threatened investigational product availability.

Regulatory authorities responded with remarkable pragmatism. The FDA issued guidance allowing protocol modifications without prior approval when necessary to protect participants. The EMA published similar flexibility provisions. These changes preserved countless trials that might otherwise have collapsed under rigid compliance expectations.

This experience permanently altered the pharma compliance landscape. Remote monitoring, once viewed skeptically by regulators, gained legitimacy through necessity. Adoption of electronic consent processes accelerated by years. Direct-to-patient drug shipments became acceptable in previously restrictive markets.

However, these pandemic-driven adaptations created new compliance concerns. Remote source data verification raised questions about documentation quality. Home-based assessments introduced standardization challenges. Alternative sample collection methods required validation studies.

Companies that successfully navigated these changes typically embraced risk-based approaches, focusing intensive monitoring on critical data points while accepting higher uncertainty in less crucial areas.

They implemented enhanced centralized monitoring to detect anomalies that might have been caught during on-site visits. They documented pandemic-related changes thoroughly, providing clear rationales for protocol modifications.

The pandemic taught valuable lessons about compliance resilience. Organizations with flexible quality systems adapted more effectively than those with rigid, procedure-driven approaches. Companies that had already implemented risk-based monitoring found the transition far less disruptive than those relying on 100% source data verification.

Building a Robust Data Integrity Program

Effective data integrity programs balance comprehensive coverage with practical implementation. Overly ambitious programs often fail when they overwhelm available resources or create unsustainable documentation burdens. Successful programs typically follow these principles.

Start with a thorough risk assessment.

Not all data carries equal importance. Critical data directly impacts patient safety and primary efficacy endpoints, while other information serves supporting purposes.

Implement tiered governance structures.

Data integrity requires oversight at multiple levels, from executive commitment to day-to-day operational controls.

Clear roles and responsibilities prevent confusion during critical situations. Senior management must demonstrate visible commitment to data integrity principles, reinforcing their importance throughout the organization.

Develop practical SOPs that staff can actually follow.

Overly complex procedures invite workarounds and unofficial “shadow practices.” Effective SOPs balance regulatory requirements with operational realities, providing clear guidance without unnecessary constraints.

Regular reviews ensure that SOPs remain relevant as practices evolve.

Validate computerized systems proportionate to risk.

Full GAMP 5 validation makes sense for critical data systems but creates unnecessary burden for lower-risk applications. Risk-based validation approaches focus intense scrutiny on high-risk functionality while applying lighter touch to less critical features.

Establish meaningful monitoring metrics.

Traditional quality metrics often incentivize the wrong behaviors, focusing on documentation perfection rather than data integrity. Effective metrics measure both process adherence and outcome quality, providing early warning of potential issues.

Regulatory and Compliance Implications for AI and ML in Pharma

Artificial intelligence and machine learning technologies have tremendous potential for enhancing clinical trials while creating novel pharma compliance challenges. These technologies fundamentally differ from traditional clinical systems in their ability to evolve, learn from data, and make autonomous decisions.

Regulatory frameworks struggle to keep pace with AI/ML innovation. The FDA’s proposed framework for AI/ML as medical devices provides some insight into the agency’s thinking, emphasizing “predetermined change control plans” that establish boundaries for algorithmic learning. Similar approaches likely apply to AI/ML used in clinical trial data analysis.

Validation of AI/ML systems defies traditional approaches. The very nature of these technologies involves continuous learning and evolution, contradicting the “fixed functionality” assumption underlying conventional validation.

Forward-thinking companies implement staged validation processes that verify initial functionality, establish performance metrics, and monitor ongoing system behavior rather than attempting one-time certification.

Bias represents a particularly insidious threat in AI/ML applications. Algorithms trained on historically biased clinical data will perpetuate and potentially amplify those biases. Companies must implement explicit bias detection and mitigation strategies, including diverse training datasets and algorithmic fairness testing, to prevent these issues.

Documentation requirements for AI/ML systems extend beyond traditional system documentation. Companies must maintain records of training data, model development processes, performance metrics, and evolution over time. These records must explain not just what the system does but how it reaches its conclusions.

Explainability has emerged as perhaps the greatest compliance challenge for advanced AI systems. Regulators increasingly expect that clinical decisions supported by AI algorithms must be explainable to human reviewers.

This creates tension between advanced “black box” algorithms that may offer superior performance and more interpretable models that provide clearer explanations of their decision-making processes.

Training and Personnel Considerations

Even perfect systems cannot overcome human factors in data integrity. Research consistently shows that data integrity breaches often stem from personnel issues rather than technical failures.

Effective training programs address both procedural knowledge and underlying rationales, helping staff understand not just how to follow procedures but why those procedures matter.

Building a compliance culture requires more than periodic training sessions. Organizations must actively demonstrate that they value data integrity over expedience or favorable results. This means recognizing and rewarding ethical behavior, responding appropriately to integrity concerns, and never penalizing staff for raising potential issues.

Role-specific training recognizes that different positions face different data integrity challenges. Data managers need different skills from clinical monitors, who in turn face different situations than site coordinators. Tailored training addresses these specific needs rather than taking a one-size-fits-all approach.

Vendor personnel present special challenges. Sponsors cannot directly control their training or daily practices yet remain responsible for the integrity of data they generate. Effective vendor management includes verifying training programs, conducting periodic assessments, and establishing clear quality agreements that specify data integrity expectations.

Audit and Inspection Readiness

Regulatory inspections test both documented processes and actual practices. Organizations that focus exclusively on documentation often discover painful gaps when inspectors observe real operations. Truly inspection-ready companies maintain alignment between their stated procedures and daily activities.

Internal audit programs play a major role in maintaining this alignment. Effective audits go beyond checking boxes to critically evaluate whether processes actually produce reliable data. This requires auditors with both technical knowledge and critical thinking skills who can identify potential vulnerabilities that might escape notice in routine reviews.

Mock inspections provide invaluable preparation opportunities. These simulations should replicate actual inspection conditions as closely as possible, including unannounced elements and challenging scenarios.

The most valuable mock inspections intentionally probe potential weak points rather than confirming known strengths.

Remote inspections, accelerated during the pandemic, appear likely to continue in some form. These virtual reviews present unique challenges in document presentation, system demonstrations, and communication clarity.

Companies must adapt their inspection readiness approaches to address these differences, including technology preparations, virtual presentation skills, and electronic document organization.

Data Integrity in Global Clinical Trials

Multi-regional trials face data integrity challenges beyond those of single-country studies. Cultural differences significantly impact data collection quality and consistency. In some regions, hierarchical relationships may discourage site staff from reporting problems to sponsors. In others, cultural concepts of time affect the contemporaneous documentation principle.

Cross-border data transfers trigger complex regulatory requirements, particularly when European or Chinese data travels to other regions. GDPR in Europe, PIPL in China, and various national regulations create a compliance maze that requires careful navigation. Data localization requirements in some countries further complicate global data management strategies.

Translation introduces additional data integrity risks. Subtle meaning changes during translation can affect everything from informed consent to adverse event descriptions. Back-translation verification helps catch these issues but adds complexity to document control processes.

Successful global trials implement standardized processes adapted to local contexts. This seemingly contradictory approach requires identifying core data integrity principles that must remain consistent worldwide while allowing flexibility in implementation details to accommodate local regulations and practices.

Technological Solutions for Enhanced Data Integrity

Technology offers powerful tools for strengthening data integrity when properly implemented. Electronic data capture systems with robust audit trails, validation checks, and security features significantly reduce many traditional data integrity risks.

Modern EDC systems automatically verify data against established parameters, flag potential issues for review, and maintain comprehensive metadata about every data point.

Blockchain technology has moved beyond theoretical applications into practical pilot programs for specific data integrity challenges. Its inherent immutability makes blockchain particularly valuable for establishing trusted timestamps, verifying document authenticity, and creating tamper-evident audit trails.

However, blockchain implementations must overcome significant challenges regarding scalability, regulatory acceptance, and integration with existing systems.
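The tamper-evidence idea behind these pilots can be illustrated without a full blockchain. The sketch below is a simple hash chain, not a distributed ledger: each audit entry stores the hash of the previous entry, so editing any earlier entry breaks verification. The entry fields and function names are assumptions chosen for illustration.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

def _entry_hash(prev_hash: str, user: str, action: str, timestamp: str) -> str:
    payload = json.dumps([prev_hash, user, action, timestamp])
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()

def append_entry(trail: list, user: str, action: str, timestamp: str) -> None:
    """Append an audit entry linked to the hash of the previous entry."""
    prev_hash = trail[-1]["hash"] if trail else GENESIS
    trail.append({
        "user": user, "action": action, "timestamp": timestamp,
        "prev_hash": prev_hash,
        "hash": _entry_hash(prev_hash, user, action, timestamp),
    })

def verify(trail: list) -> bool:
    """Recompute every hash in order; any edited entry breaks the chain."""
    prev_hash = GENESIS
    for entry in trail:
        expected = _entry_hash(prev_hash, entry["user"], entry["action"], entry["timestamp"])
        if entry["prev_hash"] != prev_hash or entry["hash"] != expected:
            return False
        prev_hash = entry["hash"]
    return True

trail = []
append_entry(trail, "jdoe", "set SBP=120", "2024-03-01T09:00:00Z")
append_entry(trail, "asmith", "set SBP=125 (transcription error)", "2024-03-01T10:15:00Z")
print(verify(trail))  # True

trail[0]["action"] = "set SBP=110"  # simulate a silent edit to an earlier entry
print(verify(trail))  # False
```

A chain like this detects changes but does not prevent them, and anyone able to rewrite the whole trail could recompute every hash. Blockchain designs address that gap by distributing copies across independent parties.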

Automated data verification tools employ statistical algorithms to identify anomalous patterns that might indicate data issues. These tools can detect problems that might escape human reviewers, such as statistical impossibilities, unexpected correlations, or subtle patterns suggesting procedural deviations.

When deployed prospectively during trials, these tools enable early intervention before problems become widespread.
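As a simplified example of such a check, the sketch below flags sites whose mean value for a measurement sits far from the other sites, using a robust (median-based) z-score. The site names, values, and the 3.5 cutoff are illustrative assumptions. Production tools typically combine many tests of this kind.

```python
from statistics import median

def flag_outlier_sites(site_means: dict[str, float], threshold: float = 3.5) -> list[str]:
    """Flag sites whose mean deviates strongly from the other sites."""
    values = list(site_means.values())
    center = median(values)
    # Median absolute deviation: a spread estimate that one extreme site cannot distort.
    mad = median(abs(v - center) for v in values)
    if mad == 0:
        return []  # no spread to compare against
    # 0.6745 rescales the MAD so the score is comparable to a standard z-score.
    return sorted(
        site for site, v in site_means.items()
        if abs(0.6745 * (v - center) / mad) > threshold
    )

# Hypothetical mean systolic blood pressure per site.
means = {"site_01": 128.4, "site_02": 131.0, "site_03": 129.7,
         "site_04": 130.2, "site_05": 112.3, "site_06": 127.9}
print(flag_outlier_sites(means))  # ['site_05']
```

A flag is a prompt for review, not proof of a problem. A site may differ for legitimate reasons such as its patient population.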

Real-time compliance monitoring dashboards give study teams visibility into data quality metrics, allowing prompt intervention when issues emerge. These dashboards track metrics like query rates, protocol deviation frequency, and data entry timeliness, providing early warning of potential problems.

The most effective implementations integrate data from multiple sources to provide comprehensive oversight rather than system-specific views.

Implementing a Data Integrity Remediation Program

When data integrity issues arise, effective remediation requires systematic approaches rather than quick fixes. The first step involves comprehensive gap assessment to identify both symptomatic problems and their root causes. This assessment must examine not just where failures occurred but why existing controls didn’t prevent or detect them.

Prioritization frameworks help companies address the most critical issues first when resources cannot tackle everything simultaneously. These frameworks typically consider patient safety impact, regulatory significance, and effect on study conclusions when determining remediation sequence.
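A prioritization framework of this kind often reduces to a weighted score. The sketch below assumes a 1-to-5 rating for each of the three factors named above. The weights, ratings, and example findings are hypothetical and would need to be justified and documented by the quality unit.

```python
from dataclasses import dataclass

# Hypothetical weights, for illustration only.
WEIGHTS = {"patient_safety": 0.5, "regulatory": 0.3, "study_conclusions": 0.2}

@dataclass
class Finding:
    name: str
    patient_safety: int      # 1 (low impact) to 5 (high impact)
    regulatory: int          # 1 to 5
    study_conclusions: int   # 1 to 5

    def score(self) -> float:
        """Weighted sum of the three impact ratings."""
        return sum(getattr(self, factor) * weight for factor, weight in WEIGHTS.items())

def prioritize(findings: list[Finding]) -> list[Finding]:
    """Order findings from highest to lowest weighted score."""
    return sorted(findings, key=lambda f: f.score(), reverse=True)

findings = [
    Finding("Shared login at one site", 2, 5, 3),
    Finding("Editable dosing records", 5, 5, 5),
    Finding("Late entry of diary data", 1, 2, 3),
]
for finding in prioritize(findings):
    print(f"{finding.score():.1f}  {finding.name}")
```

The value of writing the weights down is less the arithmetic than the audit trail: the remediation sequence can be explained and defended later.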

Effective corrective action plans address both immediate issues and systemic weaknesses. They establish clear timelines, assign specific responsibilities, and include verification steps to confirm effectiveness. The best plans anticipate potential implementation challenges and include contingency provisions.

Communication with regulatory authorities requires careful balance. Transparency about issues and remediation efforts builds credibility, but premature commitments can create compliance problems if timelines slip or unexpected complications arise.

Successful regulatory communications typically include regular progress updates, realistic timelines, and clear explanations of remediation rationales.

We’ll Help You Stay Pharma Compliant

Maintaining data integrity in clinical trials requires expertise, vigilance, and adaptation to evolving regulatory expectations. GMP Pros helps pharmaceutical companies build robust compliance programs that protect data integrity while supporting efficient operations.

Our team of engineers brings deep experience in regulated manufacturing environments, giving you practical solutions to complex compliance challenges. Our pharmaceutical regulatory compliance services can help you achieve and maintain the highest standards in the healthcare industry.

Data integrity is about making sure that clinical trial results are trustworthy, supporting decisions that ultimately impact patient lives.